As we continue to help customers build and deploy their Railtracks agents, we’re seeing a common pain point: how to monitor, manage, and audit those agents once they are running in production.

This is why we’ve built Conductr Agent Observability. It gives developers and DevOps teams complete visibility into their production agents with real-time monitoring, cost tracking, and reasoning traceability. Getting started takes only a few minutes.

Getting Started

Prerequisites

- You have a working Railtracks agent locally

- You’re ready to deploy it to production (Docker, Azure Functions, AWS Lambda)

Step 1: Install the SDK

Add railtownai to the requirements.txt of your Railtracks agent project (usually at the root of your repository).

You can also use pip to install it:

pip install railtownai
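
To confirm the install before wiring anything up, a small check like the one below can help. It is a minimal sketch that uses only the Python standard library and assumes the import names match the `import railtownai` and `import railtracks` statements used in Step 3; the helper name `missing_packages` is made up for illustration.

```python
import importlib.util


def missing_packages(names):
    """Return the names that cannot be imported in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level import names taken from the example in Step 3.
    missing = missing_packages(["railtownai", "railtracks"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("railtownai and railtracks are importable.")
```

If either package is reported missing, check that you installed into the same virtual environment your agent runs in.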

Step 2: Sign up for Conductr Agent Observability

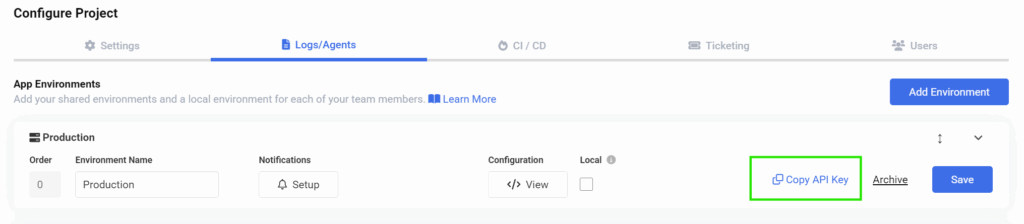

Sign up for a free account at https://conductr.io/solutions/agent-observability and get your API key from your Project Configuration page.
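
Since the API key is a secret, you may prefer to keep it out of source control. The sketch below reads it from an environment variable and fails with a clear message if it is absent. The variable name `RAILTOWN_API_KEY` and the helper `load_api_key` are assumptions made for this example, not names defined by the SDK.

```python
import os


def load_api_key(env_var: str = "RAILTOWN_API_KEY") -> str:
    """Read the API key from the environment, failing loudly if it is not set.

    The default variable name is a hypothetical choice for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Conductr API key."
        )
    return key
```

In Step 3 you could then pass `load_api_key()` to `railtownai.init` in place of the hard-coded placeholder string.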

Step 3: Add Observability to Your Agent

Here’s a complete example of a weather agent with observability enabled:

import asyncio
import logging

import railtownai
import railtracks as rt

# Initialize Railtown AI with the API key from your Project Configuration page
railtownai.init('YOUR_RAILTOWN_API_KEY')

# To create your agent, you just need a model and a system message.
Agent = rt.agent_node(
    llm=rt.llm.OpenAILLM("gpt-4o"),
    system_message="You are a helpful weather agent that can answer questions about the weather."
)

async def main():
    async with rt.Session(name="weather-agent-session") as session:
        result = await rt.call(Agent, "What is the weather in Tokyo?")
        # Collect the data recorded for this run and send it to Conductr
        agent_run_data = session.payload()
        railtownai.upload_agent_run(agent_run_data)

# Run the agent
asyncio.run(main())
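
In production you may not want a failed upload to take down the agent itself. One possible approach, sketched below, is to wrap the upload call so that any exception is logged and swallowed. The `safe_upload` helper and the logger name are hypothetical; the upload function is passed in as a parameter, so the sketch does not assume anything about the SDK beyond the `railtownai.upload_agent_run(agent_run_data)` call shown above.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("agent.observability")


def safe_upload(upload_fn: Callable[[Any], Any], payload: Any) -> bool:
    """Call upload_fn(payload) and report success as a boolean.

    Any exception is logged with its stack trace and swallowed, so an
    observability outage does not crash the agent run.
    """
    try:
        upload_fn(payload)
    except Exception:
        logger.exception("Uploading agent run data failed; continuing without it.")
        return False
    return True
```

Inside `main()`, the upload line would then become `safe_upload(railtownai.upload_agent_run, agent_run_data)`. Whether to swallow errors this way is a design choice: it favours agent availability over guaranteed delivery of every run record.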

Step 4: Verify in Conductr

Run your agent and check your Conductr Agent Runs page to see the observability data flowing in.

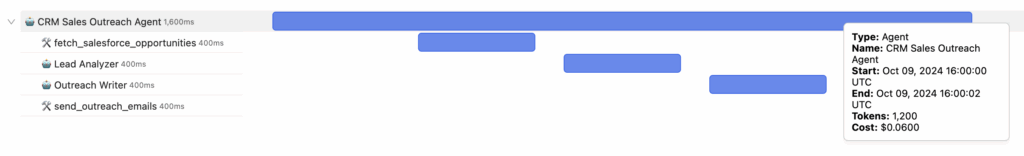

The example above shows a simple CRM Sales Outreach Agent that fetches opportunities from a database, analyzes them, and generates outreach messages.

In the example below, a weather agent retrieves the current weather in Vancouver from a weather tool, attempts to pass the response to a Structured LLM Agent, and reports that the attempt failed.

What You'll See in Conductr

Once your agent is running with observability enabled, you’ll have access to:

- Real-time agent runs dashboard with history – See all your agent executions stored in one place, where you can filter them and compare runs by date

- Per-run breakdown – Token usage, cost, latency, and success rate for each run

- Step-by-step reasoning trace – Full visibility into how your agent made decisions

- Error logs with full stack traces – Debug issues quickly with complete context

- Environment filtering – Compare behaviour across different deployment environments
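
To make the per-run metrics in the list above concrete, here is a rough sketch of how token usage, cost, latency, and success rate can be aggregated over a set of runs. It is purely illustrative: the `RunRecord` fields, the `summarize` function, and the per-1,000-token prices are hypothetical and do not reflect Conductr's data model or any real model pricing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunRecord:
    """Hypothetical shape of one agent run, for illustration only."""
    prompt_tokens: int
    completion_tokens: int
    latency_s: float
    succeeded: bool


def summarize(runs: List[RunRecord],
              usd_per_1k_prompt: float,
              usd_per_1k_completion: float) -> Dict[str, float]:
    """Aggregate token usage, cost, mean latency, and success rate."""
    if not runs:
        raise ValueError("At least one run is required.")
    prompt = sum(r.prompt_tokens for r in runs)
    completion = sum(r.completion_tokens for r in runs)
    # Cost is tokens / 1000 multiplied by the caller-supplied price per 1,000 tokens.
    cost = (prompt / 1000) * usd_per_1k_prompt + (completion / 1000) * usd_per_1k_completion
    return {
        "runs": len(runs),
        "total_tokens": prompt + completion,
        "total_cost_usd": cost,
        "mean_latency_s": sum(r.latency_s for r in runs) / len(runs),
        "success_rate": sum(1 for r in runs if r.succeeded) / len(runs),
    }
```

The prices are passed in as arguments rather than hard-coded, because they vary by model and provider.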

railtracks viz.

Additionally, you can join our community on Discord where we help each other build production-ready agents and share knowledge.